Addressing the Color Computer 3 DMA operation challenges via hardware modification, while pretty simple, limits initial adoption. It places the technique into a classic “chicken and egg” situation. The CoCo3 provides an ideal platform for DMA capabilities, but owners will only perform hardware modification if highly desired peripheral capability demands it. Hardware designers, for their part, prefer to focus energy on innovative peripheral design that targets common CoCo3 hardware configurations. What if we could shortcut the process by implementing a solution that does not require hardware alteration?

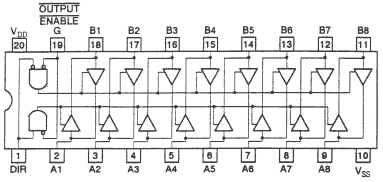

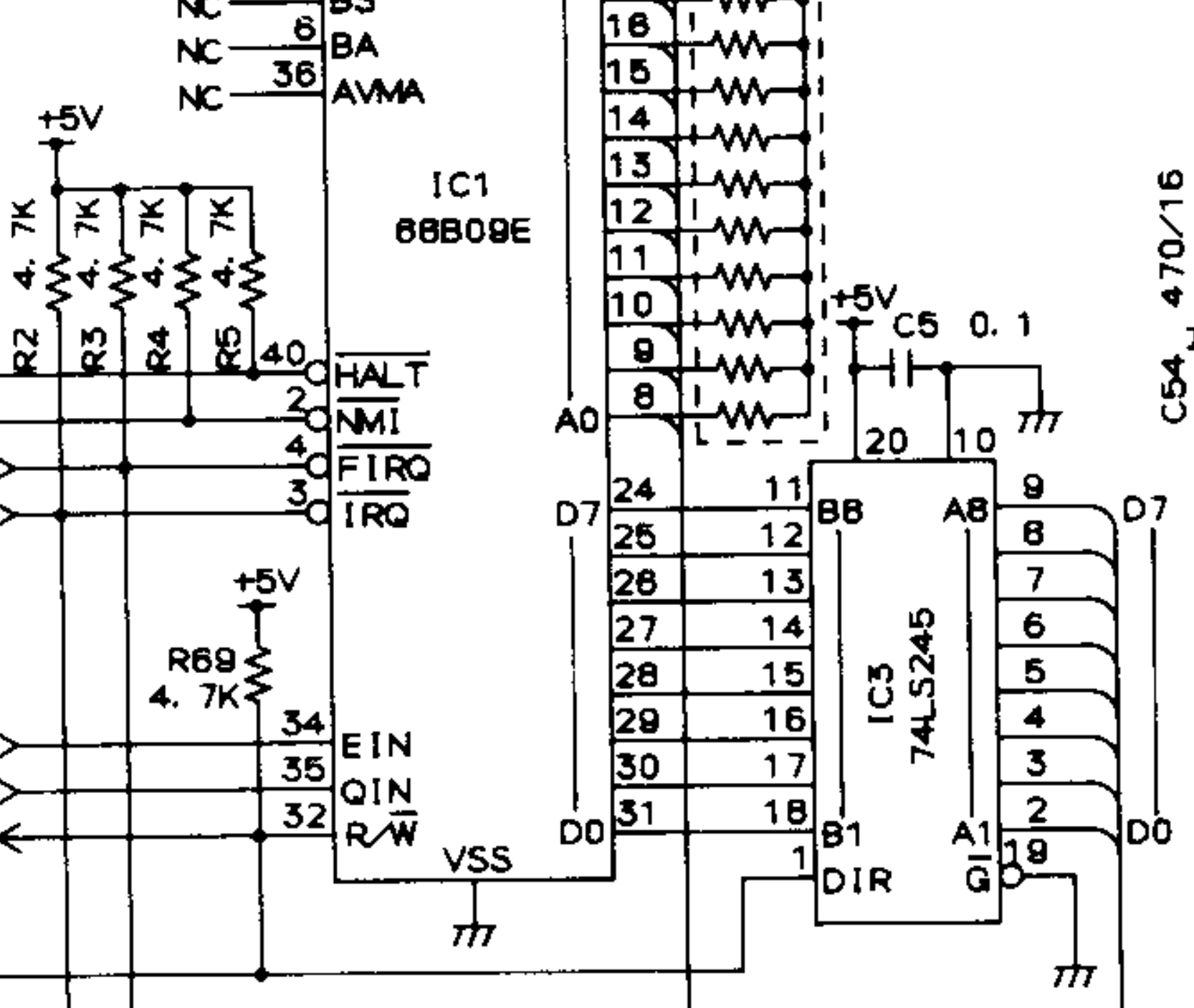

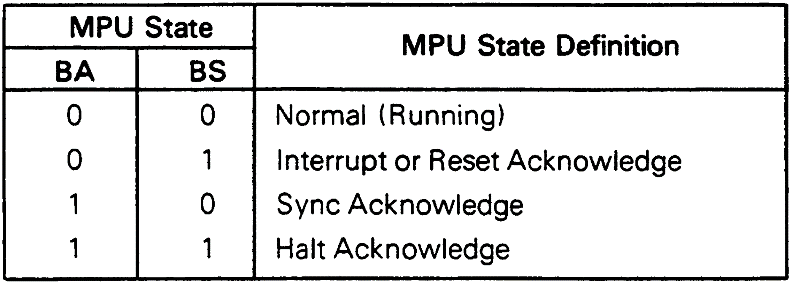

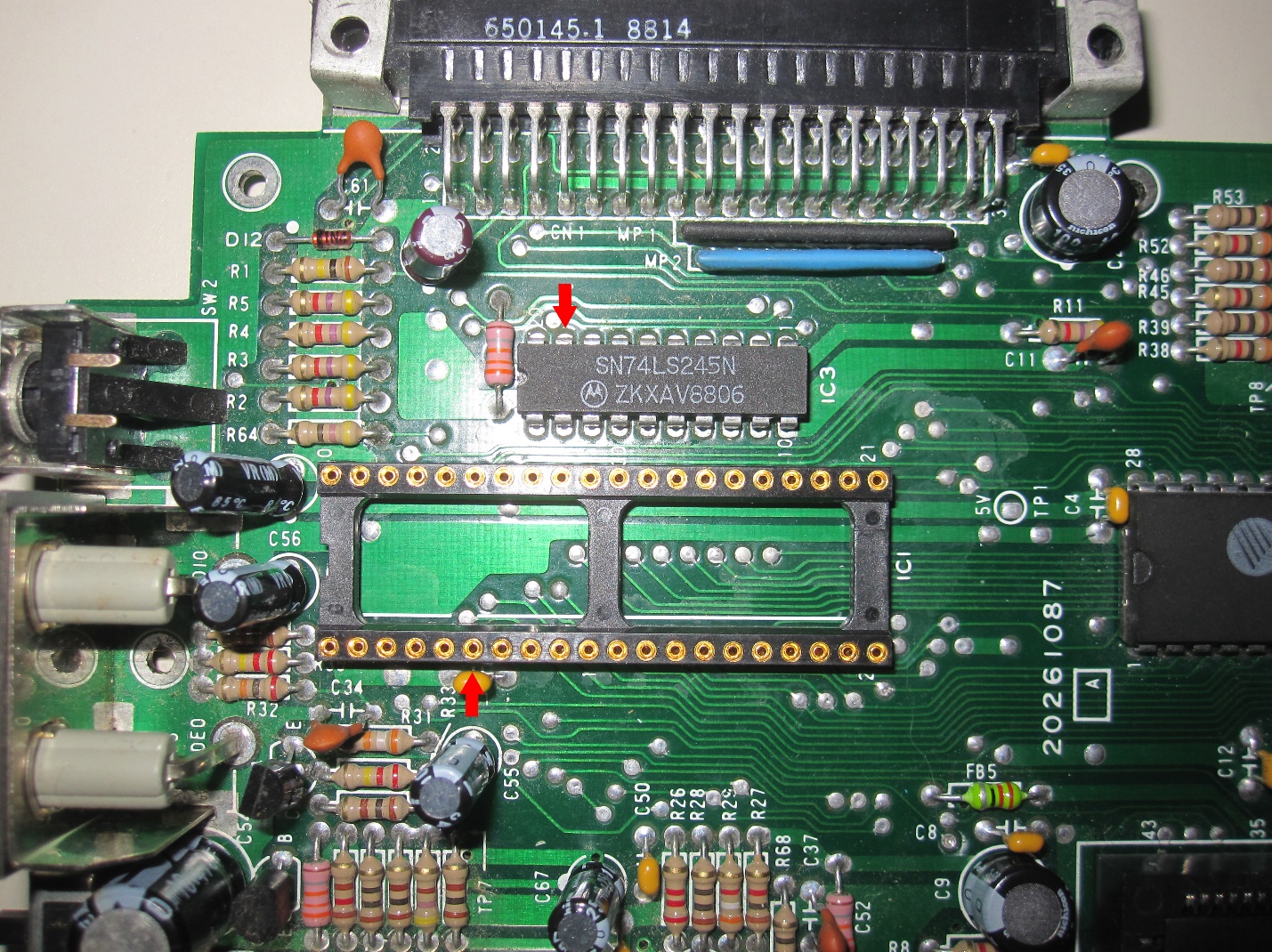

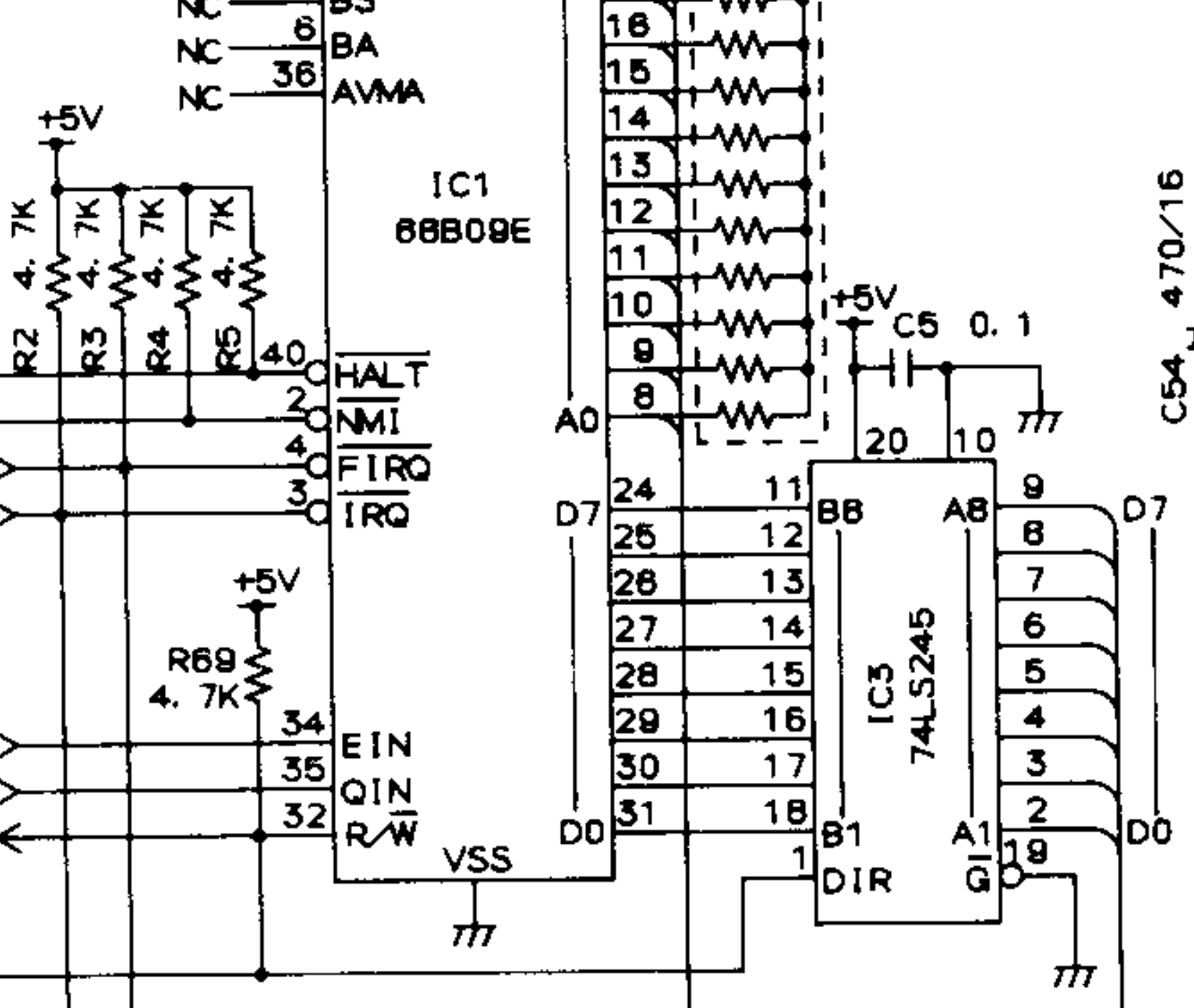

Return your attention to the portion of the schematic showing the M68B09E data bus and the 74LS245 bus transceiver, the root of the challenge. As we have noted, the transceiver is always tied to the memory data bus (pins 2-9 in the schematic) and outputs data onto the memory bus during any write cycle. How might we work around this constraint or, failing that, leverage this fact? One idea often considered attempts to “race” the ‘245 transceiver. Essentially, place the data you want to store on the bus, delay issuing the write until the last possible moment, enable the write line, which then enables the ‘245 buffer, and then hope that the memory latches your data before the ‘245 flips over and places its data on the bus.

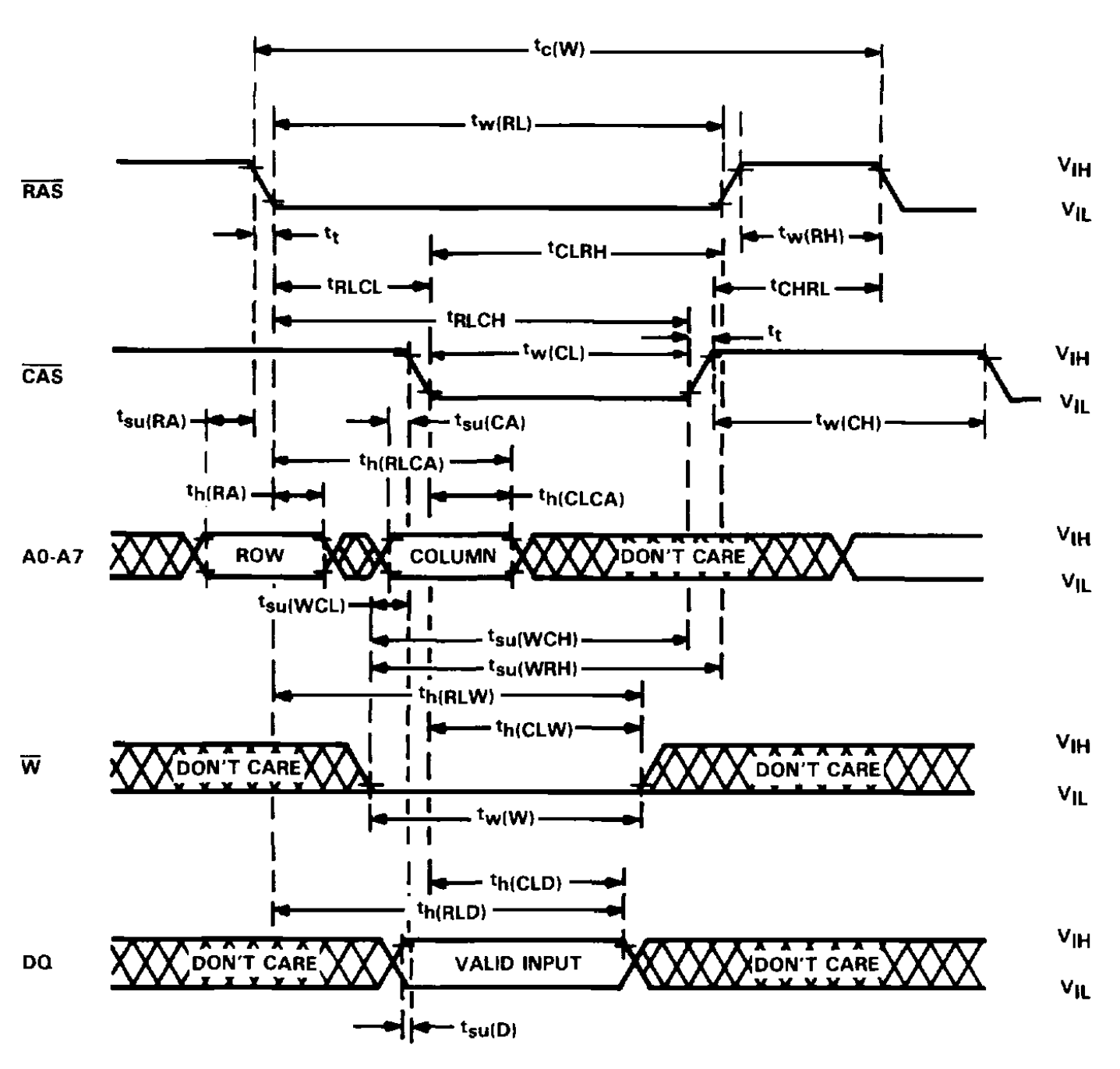

First, let’s review DRAM access by referencing the timing diagram for a Texas Instruments 4464 64K x 4 Dynamic Random Access Memory (DRAM) in Figure 2, the same type often installed in the CoCo3. For various reasons (pin count reduction, moving the multiplexing function out of each IC into a common area, supporting faster memory access options), DRAMs expose multiplexed addressing pins. Think of a DRAM as a matrix of memory cells arranged in a row/column configuration. To read or write DRAM memory, one places the row portion of the address onto the multiplexed address lines and activates the “row address strobe” (RAS) signal. The DRAM latches the row value and calls up that row in the memory matrix. A short time later, one places the column portion of the address onto the same pins and activates the “column address strobe” (CAS) signal. The DRAM then reads or writes that portion of the matrix.
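As a rough illustration of the row/column multiplexing just described, here is a minimal Python sketch. The function names are hypothetical, and the choice of the upper 8 bits as the row and the lower 8 bits as the column is an assumption made for illustration; the actual bit assignment depends on the address multiplexer in the system.

```python
# Toy model of multiplexed DRAM addressing for a 64K-cell part
# (16 address bits presented over 8 shared pins in two steps).
# ASSUMPTION: upper byte = row, lower byte = column. The real
# assignment is decided by the system's address multiplexer.
ROW_BITS = 8
COL_BITS = 8


def split_address(cell_address):
    """Split a 16-bit cell address into (row, column) halves."""
    if not 0 <= cell_address < (1 << (ROW_BITS + COL_BITS)):
        raise ValueError("address out of range for a 64K-cell DRAM")
    row = cell_address >> COL_BITS
    col = cell_address & ((1 << COL_BITS) - 1)
    return row, col


def access_sequence(cell_address):
    """Return the strobes in the order they are asserted, with the
    value present on the shared address pins at each strobe."""
    row, col = split_address(cell_address)
    return [("RAS", row), ("CAS", col)]


if __name__ == "__main__":
    for strobe, pins in access_sequence(0x1234):
        print(f"{strobe}: address pins = ${pins:02X}")
```

The point of the sketch is only the ordering: the row half is latched first on RAS, then the column half on CAS, over the same pins.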

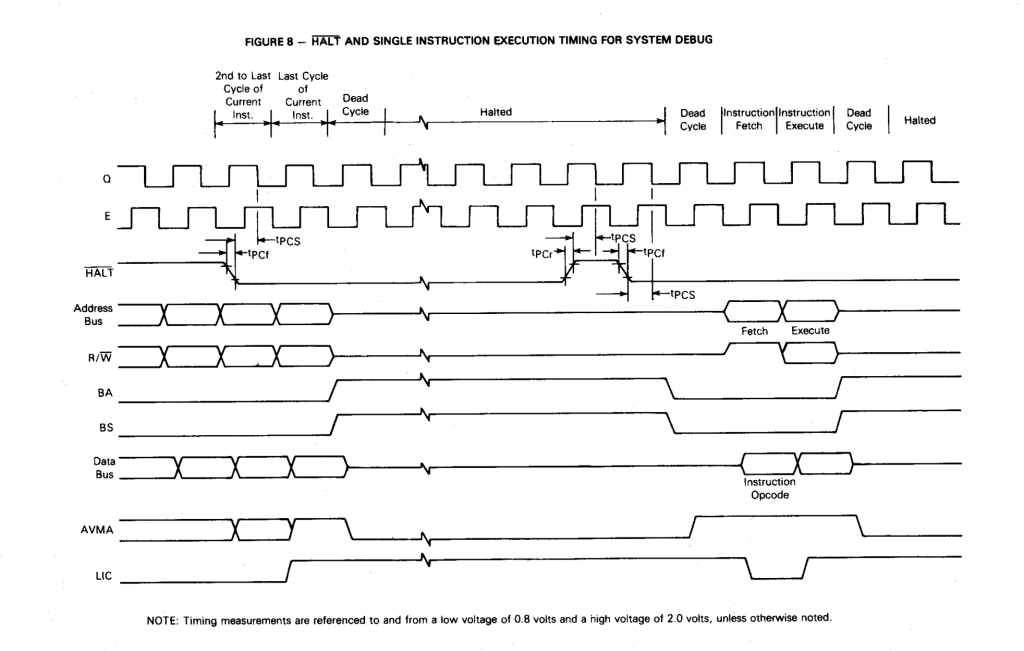

There are rules to follow. Notice the “write” signal, denoted by a W with a line over it (active low). Right above and below it, focus attention on the arrows showing th(CLW) and tw(W). th(CLW) illustrates the amount of time after the CAS line falls until the write line can go inactive (essentially, the time it takes for the actual memory write to occur). tw(W) illustrates the duration the write signal must be held low. For a 150nS (nanosecond) speed grade DRAM, tw(W) and th(CLW) are both specified as 45nS minimum. (The timing diagram misleadingly suggests the write line must be pulled low before CAS goes low, which is not true.)

On a CoCo3 running in FAST mode, we have ~280nS to perform a CPU write (560nS for a complete cycle at 1.78MHz, and the CPU gets half of that). Assuming the write will complete at the end of the CPU cycle, we need to enable the write function 45nS before that, or at 235nS. This poses a problem. Assuming that the address starts getting set up at the beginning of the CPU portion of the clock cycle and we don’t immediately activate the write line, the DRAM will perform a read activity, culminating in valid data on the DRAM data lines 150nS after the start of the cycle. We weren’t going to activate the write line until 235nS into the cycle, so now the data on the memory bus (from the DRAM) will be fighting the data we’ve placed on the bus. If that isn’t enough of an issue, when we activate the write line, the 74LS245 will enable data on its output lines approximately 25nS after doing so. But we need stable data for 45nS after activating the write line. The good news is that we’re no longer fighting the DRAM on the bus, but we end up fighting the ‘245 on the bus for the final 20nS of the cycle (45nS - 25nS).
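The arithmetic behind this “race” can be laid out in a few lines of Python. This is only a sketch that uses the round figures quoted above (560nS cycle, 45nS write pulse, 150nS DRAM access, roughly 25nS for the ‘245 to enable its outputs); the names are hypothetical, and the 85nS DRAM contention figure is simply derived from those numbers rather than measured.

```python
# Timing budget for the "race the '245" idea, in nanoseconds.
# All constants are the approximate figures quoted in the text.
CYCLE_NS = 560                    # full FAST-mode cycle
CPU_WINDOW_NS = CYCLE_NS // 2     # CPU half of the cycle
WRITE_PULSE_MIN_NS = 45           # minimum write pulse for a 150nS part
DRAM_ACCESS_NS = 150              # read data valid after cycle start
LS245_ENABLE_NS = 25              # approx. '245 output enable delay


def race_budget():
    """Return where the write must start and how long each bus
    conflict would last if we delayed the write to the last moment."""
    write_start = CPU_WINDOW_NS - WRITE_PULSE_MIN_NS
    # The DRAM drives read data from DRAM_ACCESS_NS until write starts.
    dram_contention = max(0, write_start - DRAM_ACCESS_NS)
    # The '245 drives the bus for the rest of the write pulse.
    ls245_contention = max(0, WRITE_PULSE_MIN_NS - LS245_ENABLE_NS)
    return {
        "cpu_window": CPU_WINDOW_NS,
        "write_start": write_start,
        "dram_contention": dram_contention,
        "ls245_contention": ls245_contention,
    }


if __name__ == "__main__":
    for name, value in race_budget().items():
        print(f"{name}: {value}nS")
```

Either contention window on its own is enough to make the approach unreliable, which is why the race is not pursued further.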

Perhaps, if we choose not to fight the 74LS245, we may be able to leverage it to help our cause. Enter Darren Atkinson once again. While I was preparing and testing the hardware solution for this challenge, Darren (who had started conversing with me on the topic some weeks ago) started considering options and sent me a deceptively simple email on the topic a week ago. Between us, we have refined this idea, which I now present to the public on behalf of Darren and myself.

The essential idea: We can’t keep the 74LS245 off the memory bus during a write cycle, but what if we can leverage it to put the data we want onto the bus?

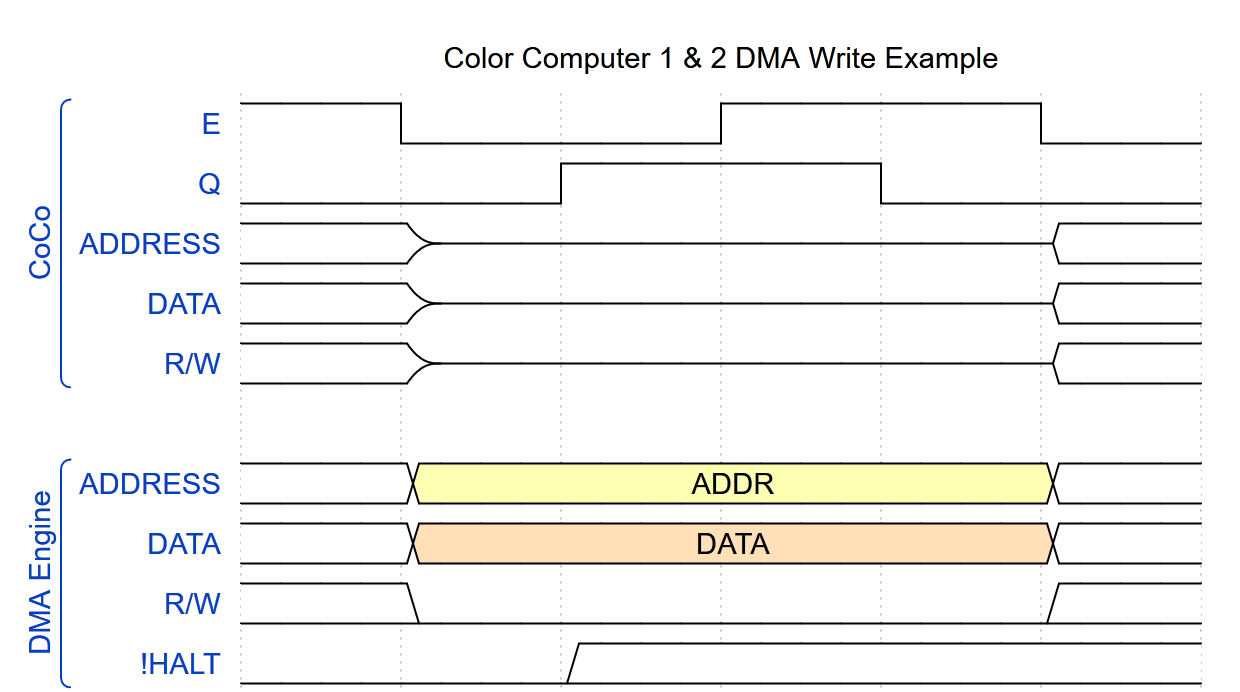

Let’s illustrate the CoCo1/2/Dragon timing diagram. I should take a moment here to sing the praises of the WaveDrom (https://wavedrom.com) Online Timing Diagram Editor.

Shown here, the address and data lines are activated by the DMA engine during the entire computer cycle, along with the enabled R/W line. The CPU essentially removes itself from the bus during the cycle. If we consider the Color Computer 3, we know from Figure 1 that the 74LS245 will output data onto the bus during a write cycle, but we also know that the memory is not connected to the CPU during the first half of the cycle (the GIME accesses memory during this time). What if we could store a value on the one side of the ‘245 during the first part of the clock cycle, and then have the transceiver push that value onto the bus during the latter half of the cycle? That would be awesome, except the only thing connected to the other side of the bus transceiver is the CPU, now removed from the bus, and some short PCB traces. It doesn’t look too promising.

Since you know where this is going, let’s dig a bit deeper into electronics. In hardware design, we like to think of computers as digital systems, where the only thing that matters is high or low, 1 or 0, +5 volts or ground. That’s great, but digital computers are inherently analog in nature, regardless of how much we want to ignore that. In analog circuits, everything has an inherent capacitance, or the ability to hold a voltage charge for a period of time. As well, everything has an inherent resistance, or a desire to slow down the flow of electrons. In fact, this capacitance and resistance lies at the heart of DRAM. Unlike static RAM, where the memory cell holds its value until power disappears, a DRAM cell is basically a small capacitor, holding a bit of charge (or not) that represents the value desired in that memory bit location. The inherent resistance in the DRAM cell slowly “bleeds” off the voltage in the capacitor, which is why DRAM must be “refreshed” every so often. Delay the refresh, the resistance bleeds off too much charge, and the memory is lost.

What if we treat the external pins of the CPU data bus, the small traces between it and the 74LS245, and the connected pins of the bus transceiver as a set of 8 small memory cells? They are crude and they will lose their value very fast, but perhaps they will hold a value long enough for us to accomplish our goals.

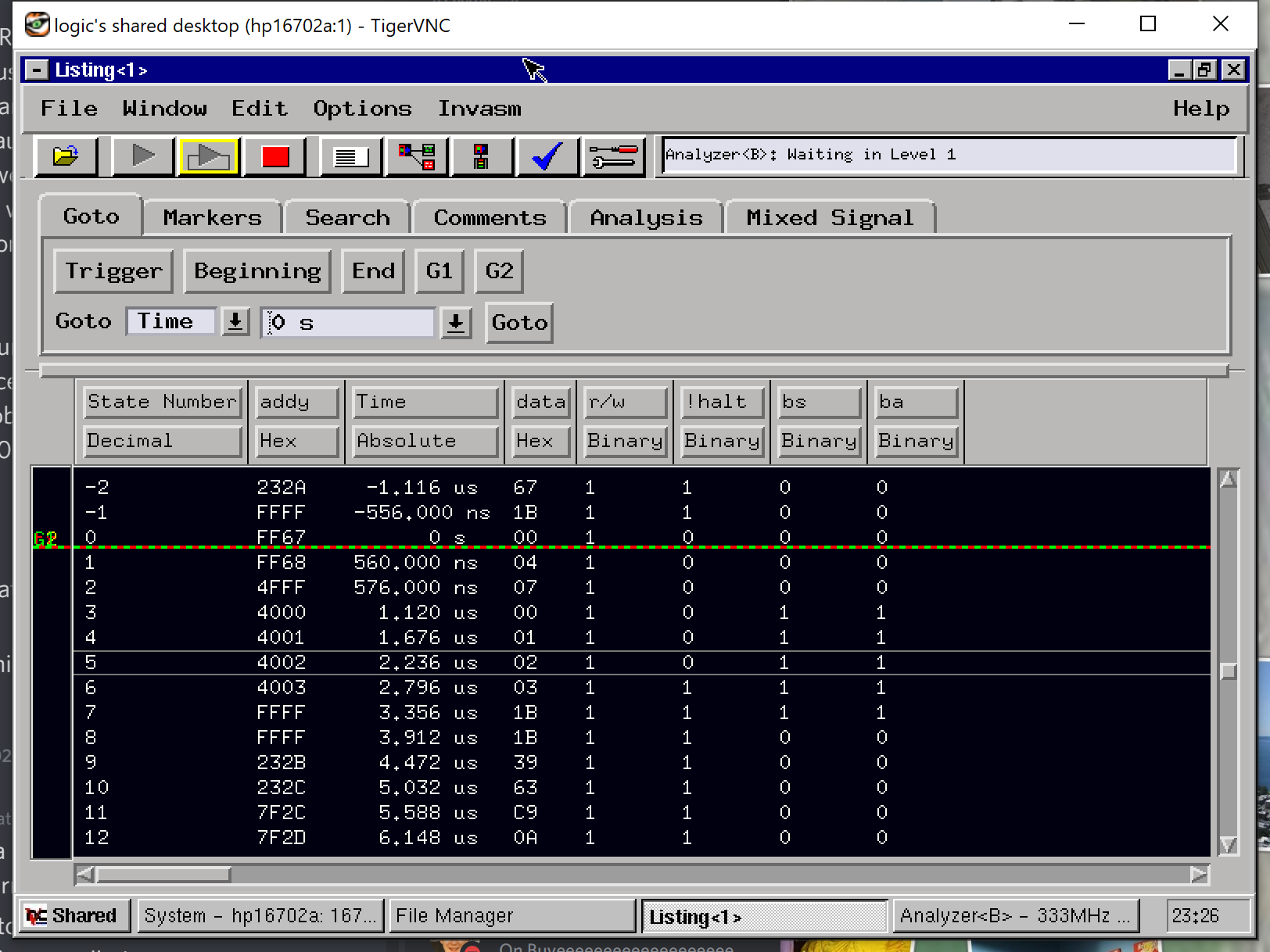

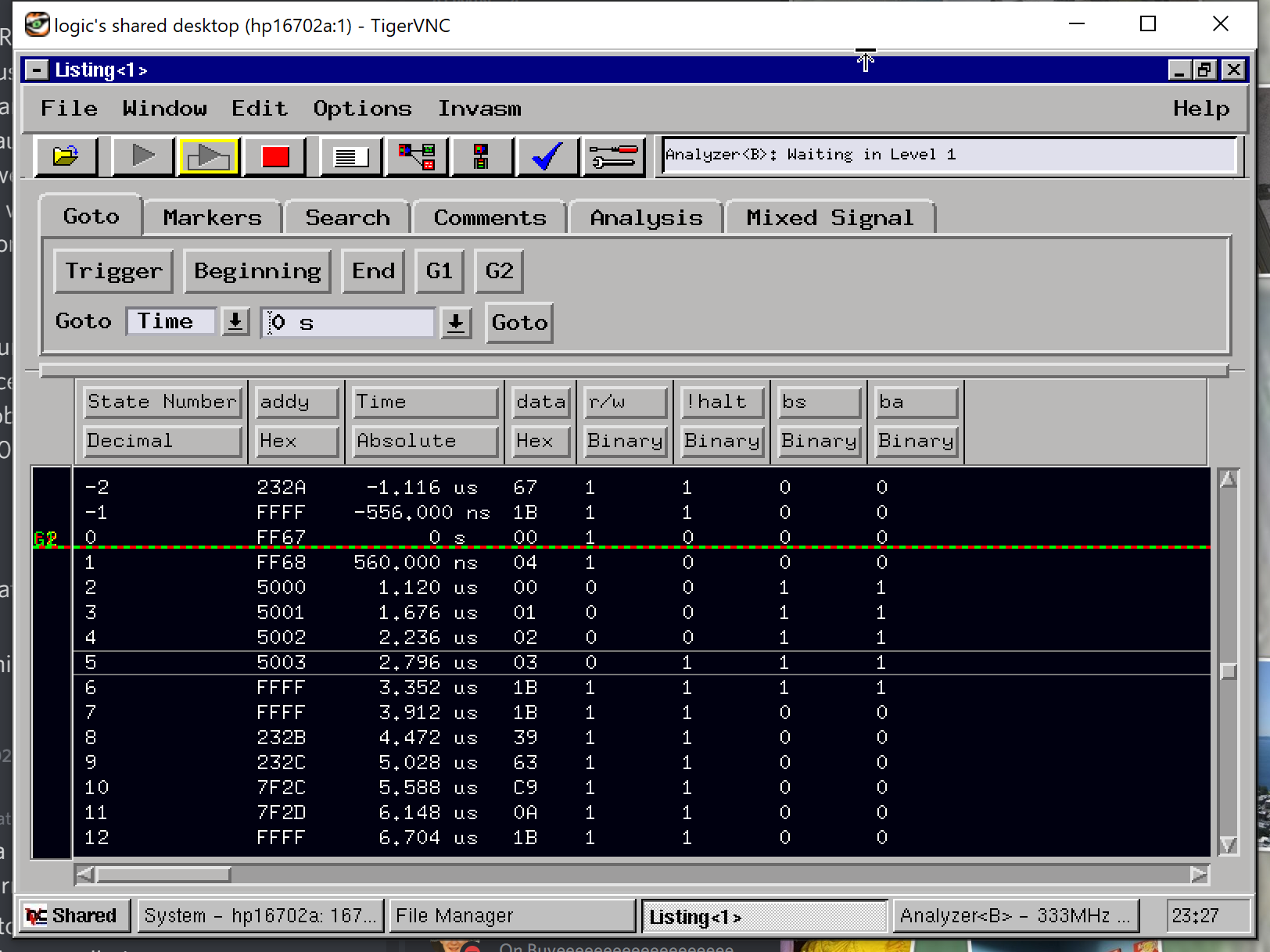

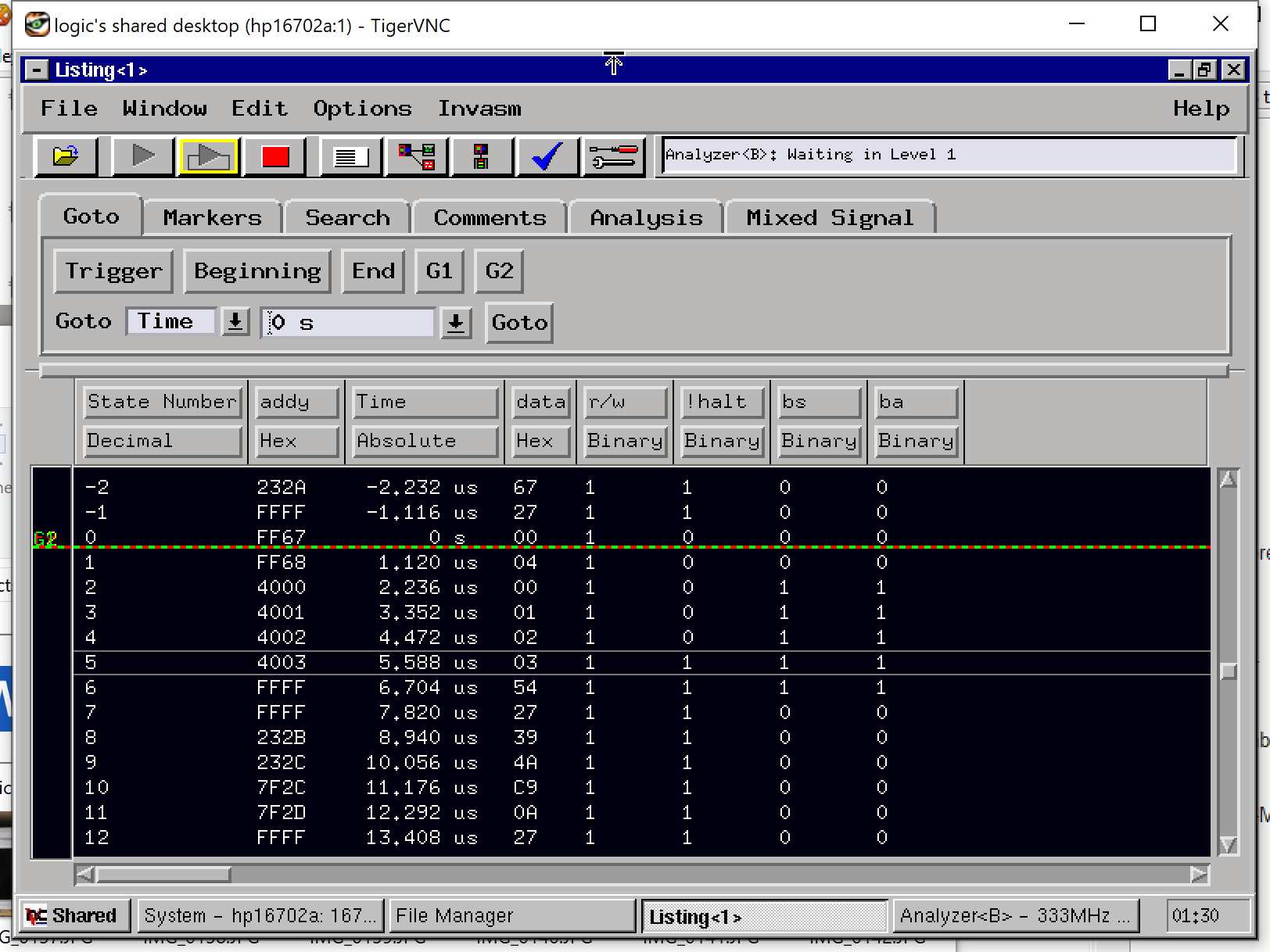

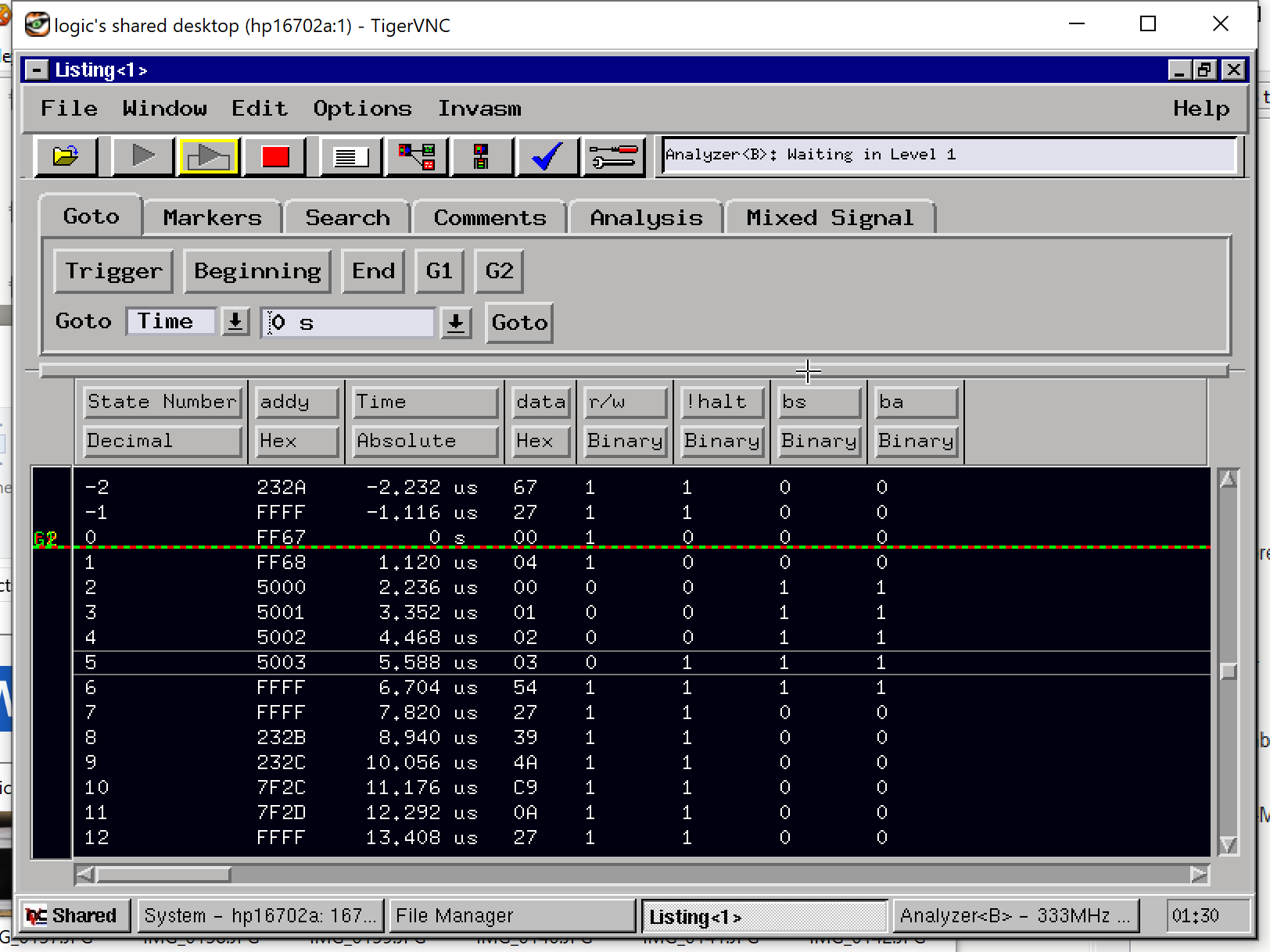

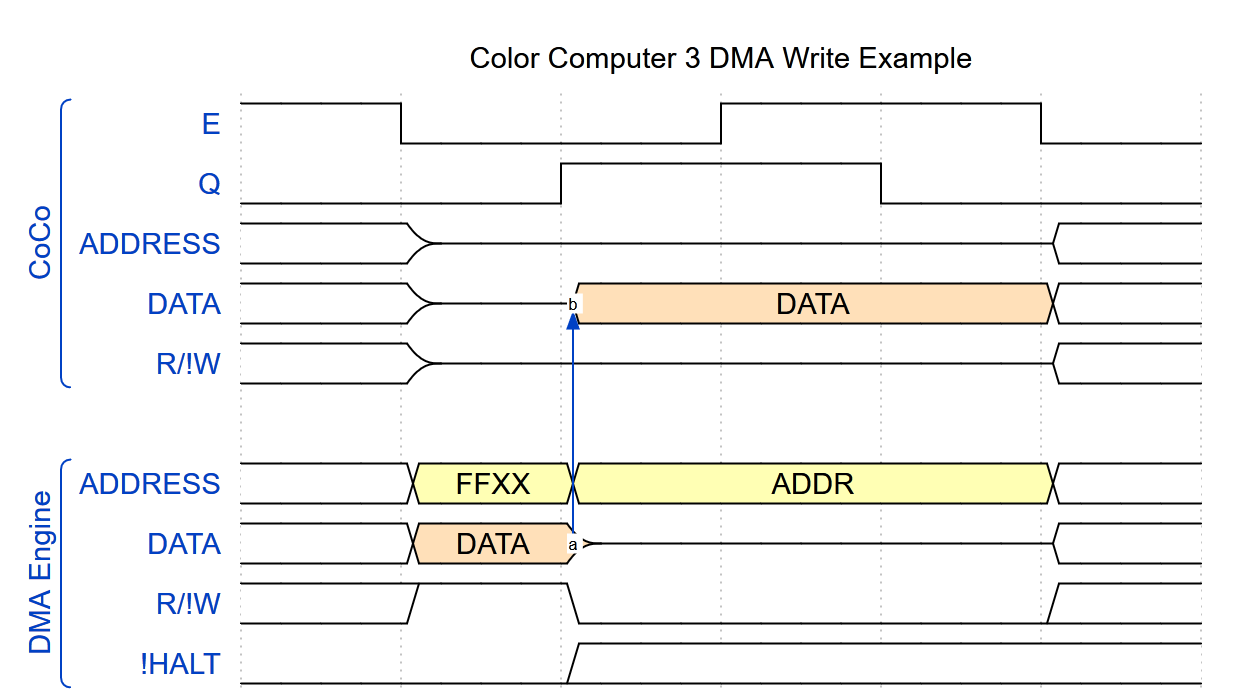

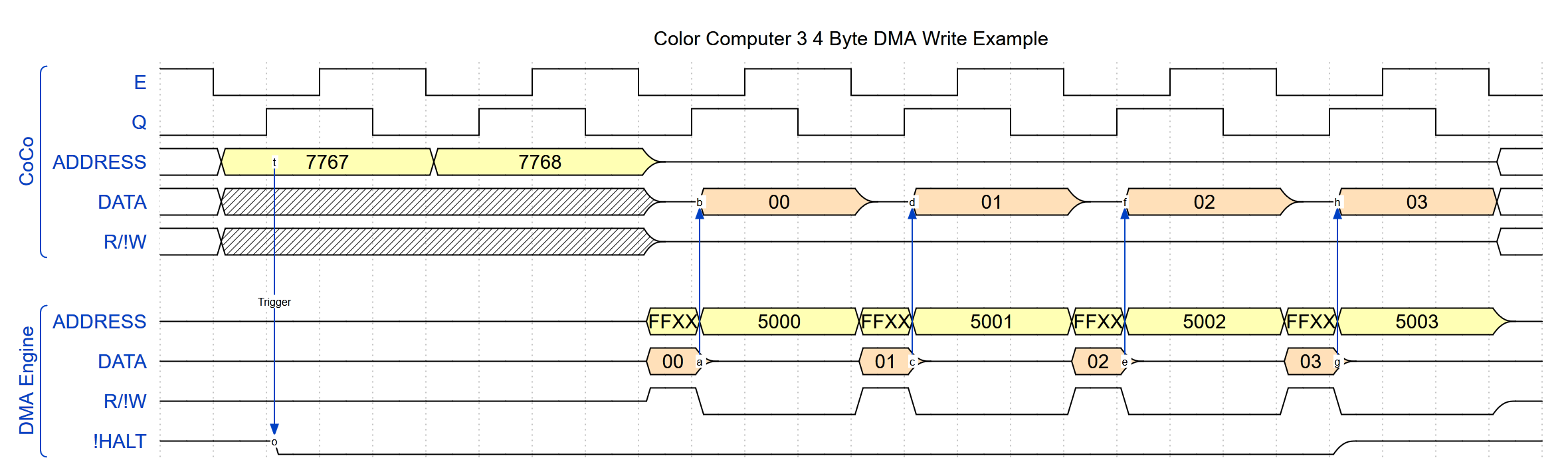

Let’s revise our timing diagram with this idea. Instead of placing data on the data bus for the entire cycle, let’s place data onto the bus during the first quarter of the cycle, while we also hold the read line high. With the CPU tri-stated (though the CPU pins are still physically connected to the bus, and so can participate in our memory cell idea), the bus transceiver dutifully captures that data and places it onto the now tri-stated data pins of the CPU (and the traces between the bus transceiver and the CPU). Then, a quarter cycle later, the DMA engine pulls the data off the bus and signals the system to write data, placing the correct address onto the address bus at that time as well. At this point, the 74LS245 bus transceiver should dutifully read the CPU data bus pins, which now hold the residual charge placed there moments before. The transceiver, locked into the “write” mode, will then amplify that charge and place it onto the memory bus, where the DRAM can access it.
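The two-step sequence above can be captured in a purely logical toy model. The Python sketch below is an idealization with hypothetical names: it treats the CPU-side pins and traces as a perfect storage cell and ignores all analog behavior (charge time, decay, thresholds), so it only shows the direction of data flow in each phase, not whether the real hardware holds the value long enough.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Phase:
    name: str
    dma_data: Optional[int]  # byte the DMA engine drives, None if released
    read: bool               # True: R/W high (read); False: write


def run_cycle(phases: List[Phase]) -> Optional[int]:
    """Return the byte on the memory bus at the end of the cycle."""
    cpu_side = None    # idealized charge on CPU pins and traces
    memory_bus = None
    for phase in phases:
        if phase.read:
            # Read direction: the '245 copies the memory bus toward
            # the (tri-stated) CPU pins, charging the parasitic cell.
            memory_bus = phase.dma_data
            if memory_bus is not None:
                cpu_side = memory_bus
        else:
            # Write direction: the '245 drives the memory bus from
            # whatever the CPU-side pins currently hold.
            memory_bus = cpu_side
    return memory_bus


def coco3_dma_write(value: int) -> Optional[int]:
    """First quarter: drive data with read asserted. Remainder:
    release the data bus and assert write."""
    return run_cycle([
        Phase("first quarter", dma_data=value, read=True),
        Phase("remainder", dma_data=None, read=False),
    ])


if __name__ == "__main__":
    print(f"${coco3_dma_write(0xA5):02X}")
```

In this model a write phase with no preceding charge phase leaves the memory bus undefined (`None`), which mirrors why the first-quarter read step is needed at all.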

Outcome: Success! It turns out that our little 8 bit memory cell can charge up in 140nS or less and will hold its value for at least 840nS (3/4 of a cycle in SLOW mode).
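The two figures quoted here follow from the cycle lengths. A short sketch, assuming the round 560nS FAST cycle used earlier and a SLOW cycle of twice that length (the function and constant names are hypothetical):

```python
# Charge and hold windows for the parasitic "memory cell", in nS.
FAST_CYCLE_NS = 560
SLOW_CYCLE_NS = 2 * FAST_CYCLE_NS  # ASSUMPTION: SLOW is half the clock rate


def cell_windows(cycle_ns):
    """Return (charge_window, hold_window): data is driven for the
    first quarter of the cycle and must survive the remaining 3/4."""
    charge = cycle_ns // 4
    hold = 3 * cycle_ns // 4
    return charge, hold


if __name__ == "__main__":
    fast_charge, fast_hold = cell_windows(FAST_CYCLE_NS)
    slow_charge, slow_hold = cell_windows(SLOW_CYCLE_NS)
    # Worst cases: shortest time to charge, longest time to hold.
    print("must charge within:", min(fast_charge, slow_charge), "nS")
    print("must hold for:", max(fast_hold, slow_hold), "nS")
```

FAST mode sets the tightest charge window and SLOW mode sets the longest hold time, so a cell that meets both bounds works in either speed mode.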

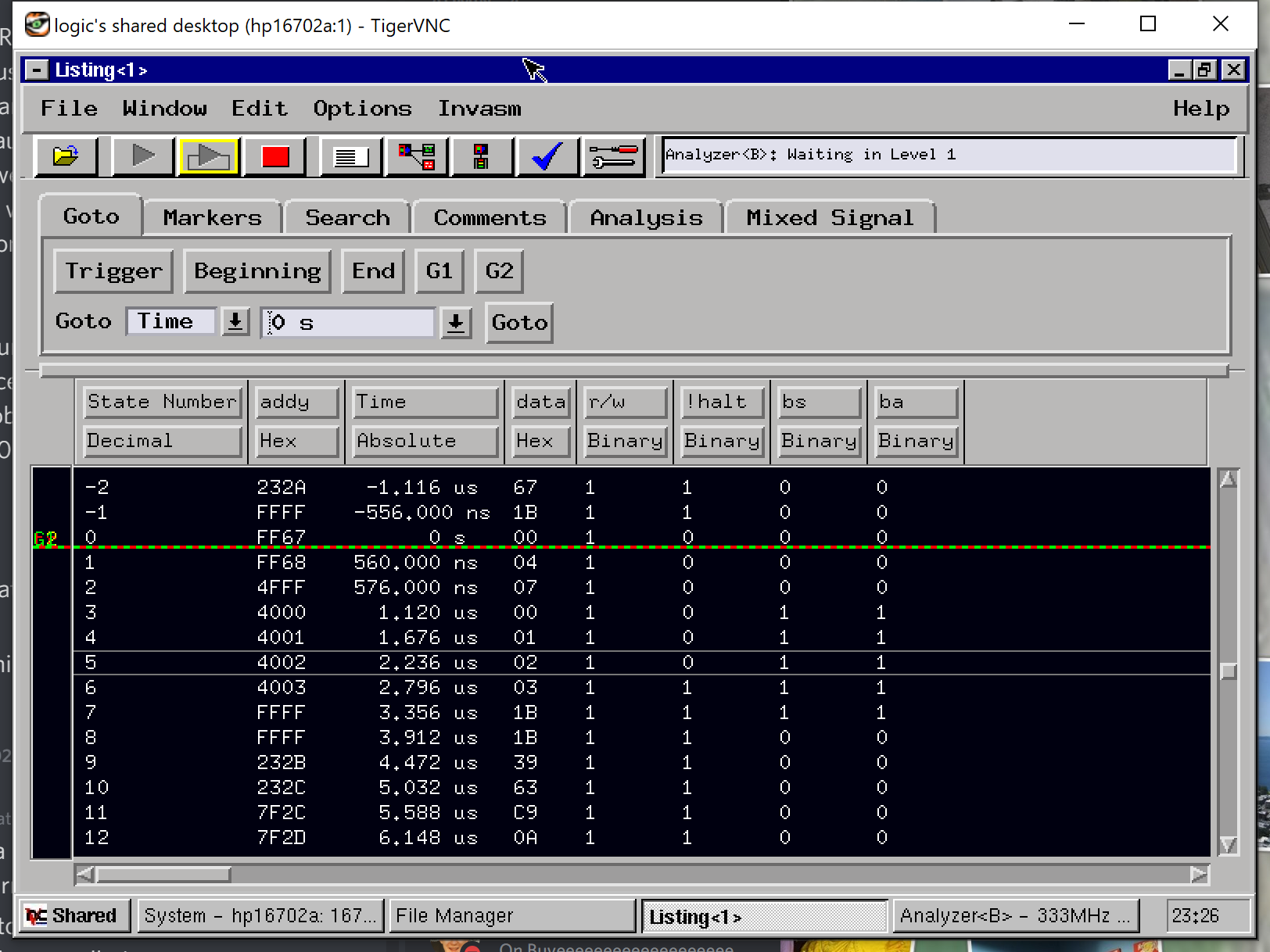

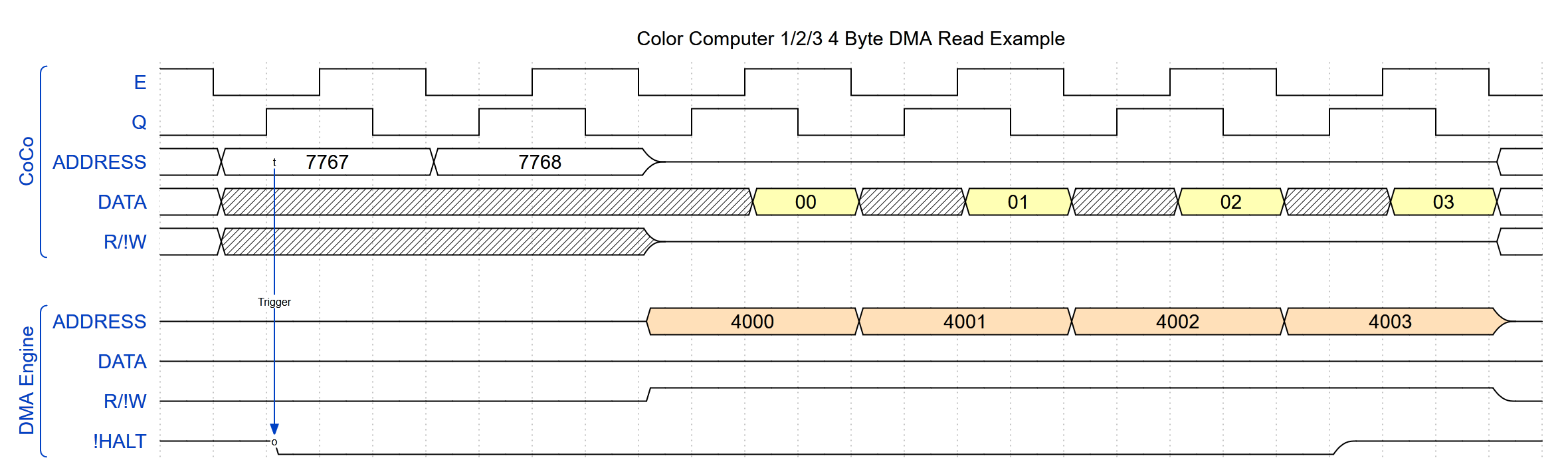

Let’s step back and look at the entire DMA process, both for reads and also for writes, with special emphasis on the changes needed for the CoCo3. As you will recall, all Color Computers share the same read operations, placing the system into DMA mode and reading memory:

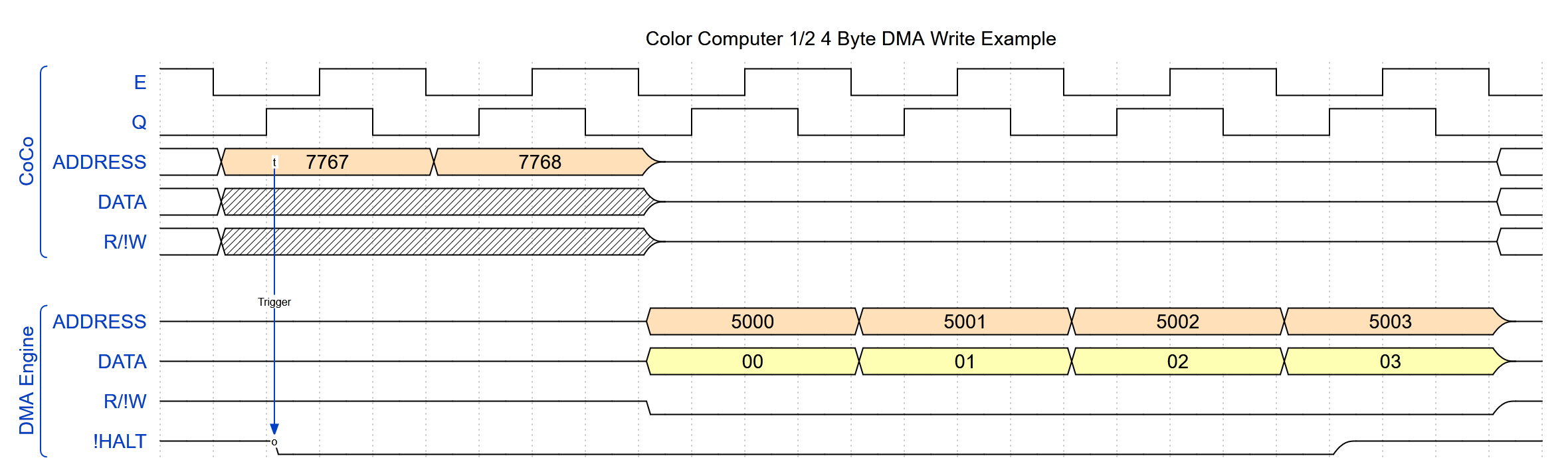

For DMA write operations on the CoCo 1 and 2, things change very little. We place data on the data bus and signal a write to memory:

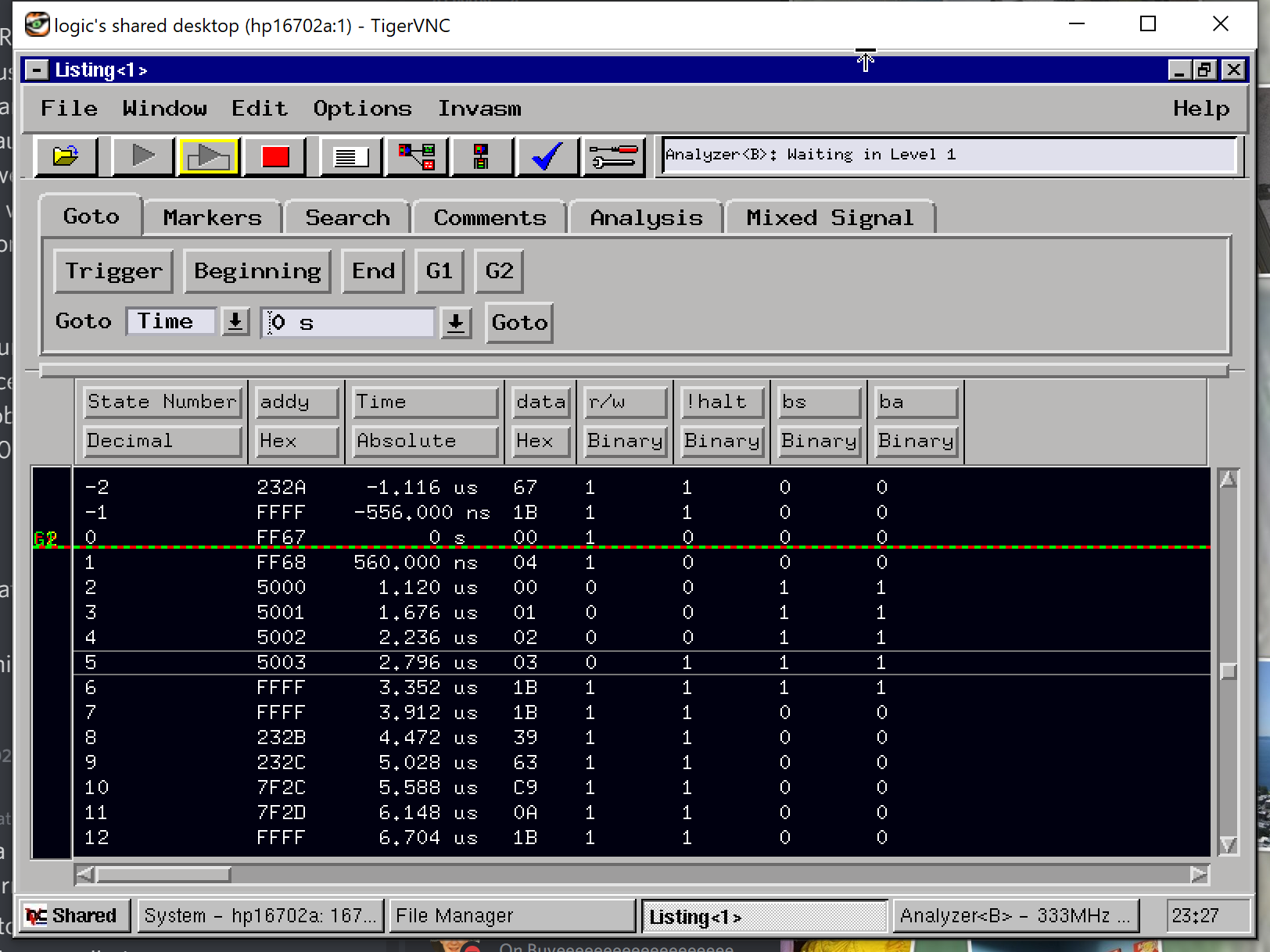

Finally, let’s show how this changes slightly to accommodate the CoCo3:

Astute readers have already started wondering: Why is $ffxx placed on the address bus at the beginning of the cycle? Why can’t the desired memory address be placed on the address bus for the entire cycle? To answer that, let’s look at all the items that can place values on the data bus. On the CoCo3, the bus transceiver, the two (2) Peripheral Interface Adapters (PIA), the cartridge port, and the GIME can output data on the bus. Let’s further focus on the first quarter of the CPU cycle, when our DMA engine has placed data on the bus and a read operation has been requested. We already know the 74LS245 bus transceiver is pulling data from the bus, not writing to it. The PIAs specifically gate their reads with the E clock and tri-state the data bus during the low half of the E clock. That leaves the GIME as a possibility.

Interestingly, the data path from the CPU to RAM and the data path from RAM to the CPU (or our DMA Engine) differ, which may surprise some. During write cycles, there are two bus buffers (74LS244) that shepherd data from the data bus into one (1) of the two (2) memory banks (the odd memory locations are in one bank, while the even ones are in another). But all RAM read accesses pass through the GIME en route to the CPU. Page 36 stipulates the GIME controls “granting access to the processor during the high time of E (CPU portion)”, which suggests the GIME acts like the PIAs. However, experimentation suggests this is slightly incorrect (or at least somewhat vague).

Since all RAM read accesses travel through the GIME data pins to the CPU, the GIME is allowed to place data on the data bus during any RAM read access. When the Color Computer 3 is configured for an all-RAM memory map, only read accesses to $ff00-$ff7f (I/O region) or $fff0-$ffff (CPU vectors) would be serviced elsewhere. The former would be handled by the PIAs and the cartridge port peripherals, while the latter always reads from onboard ROM. Normally, we would expect the data pins to be inactive during the low portion of the E clock cycle, when the CPU is not accessing the bus. However, possibly due to the need to reduce logic complexity, the GIME tri-states its data bus anytime a write is requested or the above address blocks are accessed, regardless of the state of the E clock cycle. Thus, to ensure the GIME stays off the data bus during the read portion of our DMA write activity, we select an address in one of these areas. We did not test this experimentally, but we assume that if a memory map with ROM is selected, all ROM memory locations will also force the GIME to tri-state its data pins (the DMA engine has to select a suitable address given the most restrictive set of requirements/memory map).

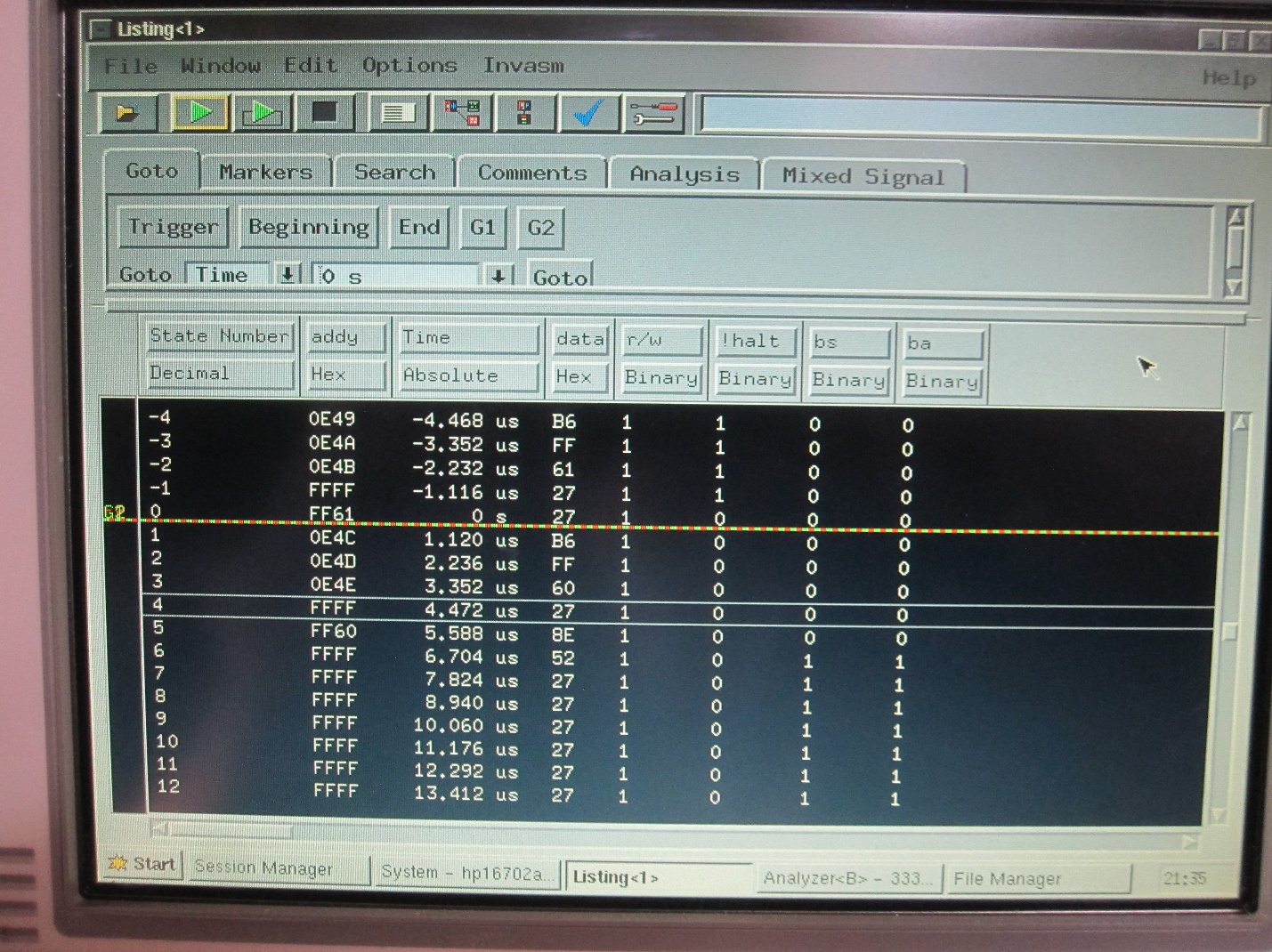

As a further note, there is one other portion of the memory map that forces the GIME off the bus during a read cycle. The data sheet for the MC6883 Synchronous Address Multiplexer (SAM), which the GIME partially emulates, denotes locations $ffe0-$ffef as “Reserved for future MPU enhancements. Do Not Use!” (emphasis in original document). To validate this information, a special version of the DMA Engine was created that allowed the “read address” value to be manipulated by the CoCo3, and all 65536 address values were tested.
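The address rule described in the last few paragraphs can be summarized as a small predicate. This Python sketch covers only the all-RAM memory map and only the three ranges named in the text; the names are hypothetical, and ROM regions in other memory maps are deliberately left out because that case was assumed rather than tested.

```python
# Read addresses for which the GIME keeps its data pins tri-stated,
# per the ranges named in the text (all-RAM memory map only).
GIME_OFF_BUS_READ_RANGES = [
    (0xFF00, 0xFF7F),  # I/O region (PIAs, cartridge port)
    (0xFFE0, 0xFFEF),  # reserved block noted in the SAM data sheet
    (0xFFF0, 0xFFFF),  # CPU vectors, served from onboard ROM
]


def gime_stays_off_bus(read_address):
    """True if a read at this address should leave the data bus free
    for the DMA engine during the first part of a DMA write."""
    if not 0 <= read_address <= 0xFFFF:
        raise ValueError("address must fit in 16 bits")
    return any(lo <= read_address <= hi
               for lo, hi in GIME_OFF_BUS_READ_RANGES)


if __name__ == "__main__":
    for addr in (0x8000, 0xFF00, 0xFF80, 0xFFE0, 0xFFFF):
        print(f"${addr:04X}: {gime_stays_off_bus(addr)}")
```

A DMA engine would present any address for which this predicate is true during the read portion of the cycle, then switch to the real target address for the write.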

We must perform more testing to ensure correct operation across various CoCo3 variations. As well, though we have tested with both MC6809E and HD63C09E CPUs, using stock memory, DRAM-based memory expansions, and SRAM-based memory expansions, various Dynamic Address Translation (DAT) logic options like those found in the Boomerang E2 and Triad+ have yet to be assessed. Initial results, though, look promising, and we are eager for others to replicate Darren’s and my findings.

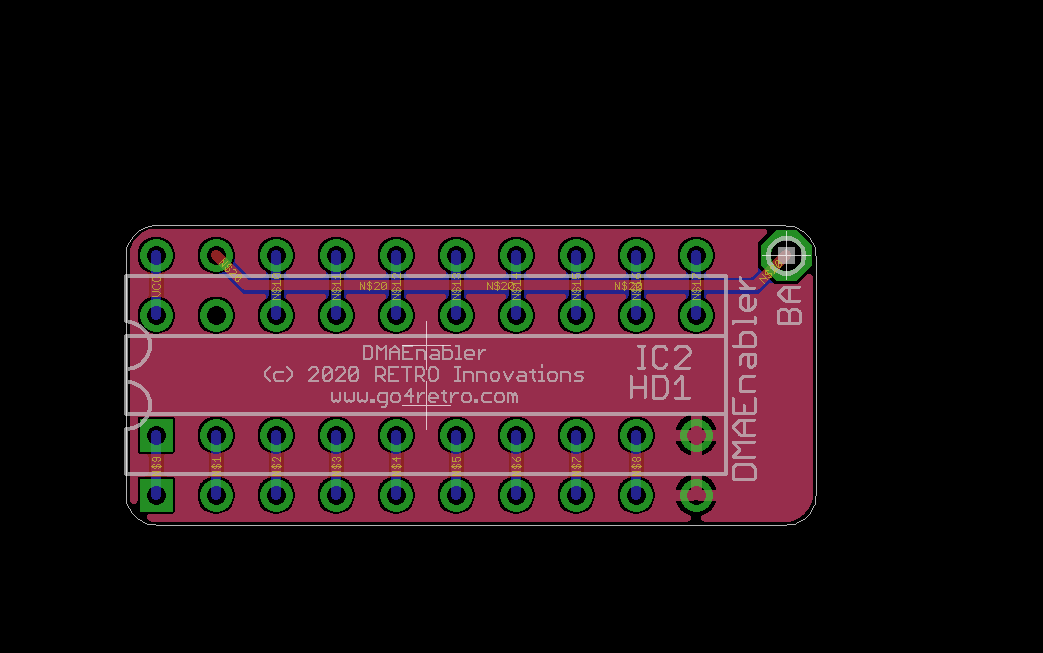

I would like to take this opportunity to once again thank Darren Atkinson for discovering this solution. Though we both contributed to the final implementation, I can honestly say I would not have considered this path had he not done the initial investigation. As well, I appreciate that, unlike years ago when electronic testing equipment and design tools and services were completely out of the hobbyist’s reach, items like surplus test equipment and PCB CAD software and manufacturing services allow easy investigation into ideas like these.

Now, since we have conquered all of the Color Computer variants, can we do anything else with this DMA idea?